How AI Foundation Models Are Revolutionizing Complex Reasoning: From Game-Playing to Mathematical Discovery

Introduction: The Pursuit of Advanced Artificial Intelligence

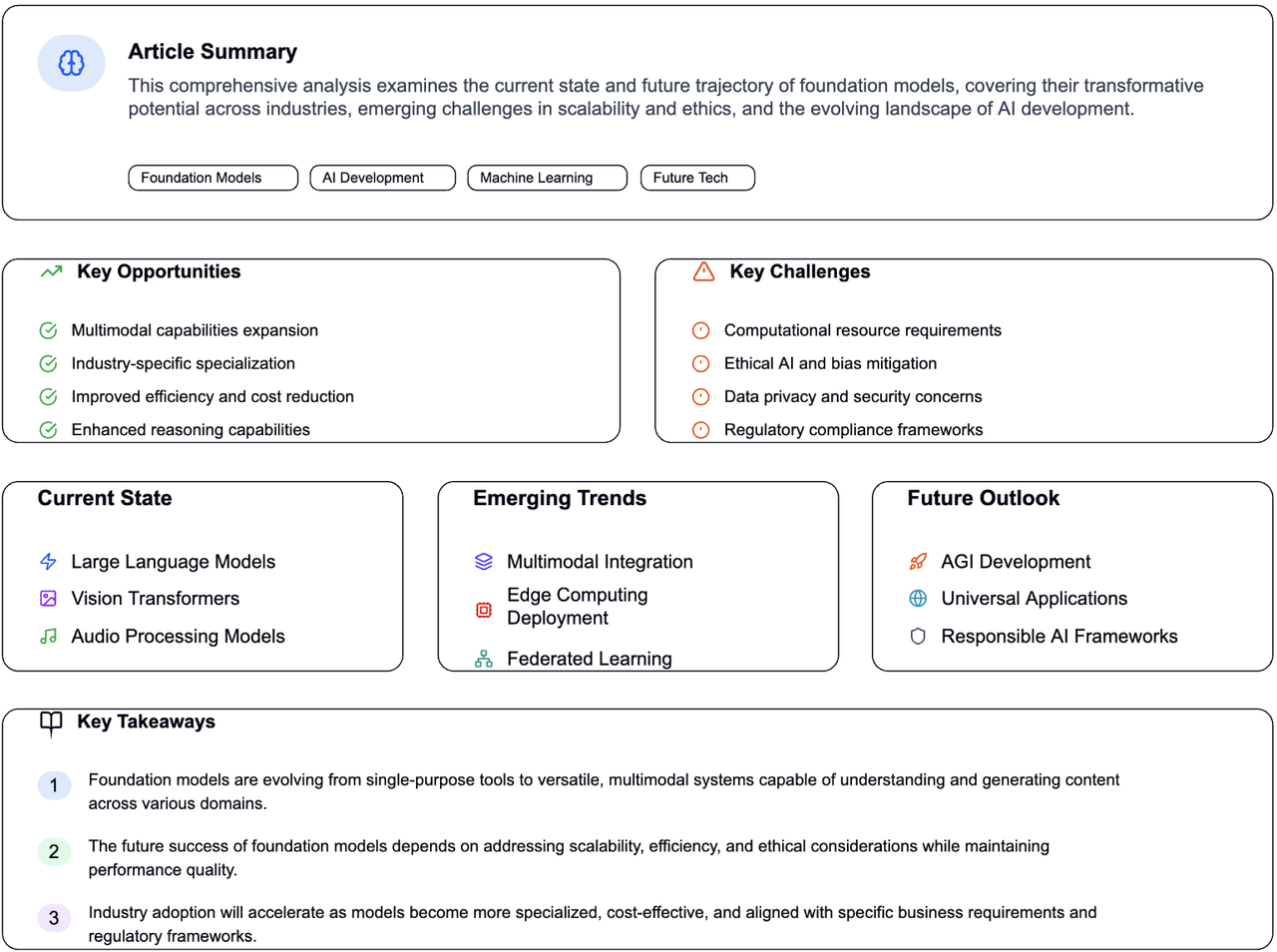

Envision a computer that solves a geometry problem the way a person does: not by memorizing formulas, but by visualizing shapes, spotting patterns, and making creative connections. Modern AI foundation models are beginning to show this kind of behavior, which marks a significant shift in how machines tackle complex reasoning tasks.

The advancement of artificial intelligence has consistently been fueled by ambitious objectives: creating machines that can engage in strategic games, uncover mathematical theorems, and solve problems that demand genuine insight rather than mere computational power. Today's foundation models signify a considerable advancement in this endeavor, merging pattern recognition capabilities with adaptable problem-solving skills.

The Evolution of AI Reasoning: From Symbol Manipulation to Data-Driven Learning

The Limitations of Symbolic AI

Traditional AI systems predominantly relied on symbolic reasoning—programming computers with explicit rules and logical operations. This approach is akin to providing someone with a detailed cookbook for every conceivable cooking scenario. While effective for well-defined problems, it quickly became cumbersome when confronted with the complexities of real-world situations.

For instance, early chess programs combined brute-force search with manually crafted evaluation functions. Programmers encoded their own knowledge of what makes a chess position advantageous, so these systems could play only as well as their hand-written heuristics allowed and could not adapt beyond them.
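To make the search-plus-evaluation recipe concrete, here is a minimal sketch on a toy take-away game rather than chess (an assumption made for brevity): players alternately remove 1 to 3 stones, and whoever takes the last stone wins. The names `handcrafted_eval`, `negamax`, and `best_move` are illustrative and do not come from any real chess engine.

```python
from typing import List


def legal_moves(stones: int) -> List[int]:
    """A player may take 1, 2, or 3 stones, but no more than remain."""
    return [take for take in (1, 2, 3) if take <= stones]


def handcrafted_eval(stones: int) -> float:
    """Hand-written heuristic, from the viewpoint of the player to move.

    The rule "a pile that is a multiple of 4 is bad for the mover" is
    expert knowledge typed in by the programmer, not learned from data.
    """
    return -1.0 if stones % 4 == 0 else 1.0


def negamax(stones: int, depth: int) -> float:
    """Depth-limited exhaustive search; falls back to the heuristic."""
    if stones == 0:
        return -1.0  # the opponent took the last stone, so the mover lost
    if depth == 0:
        return handcrafted_eval(stones)
    return max(-negamax(stones - take, depth - 1) for take in legal_moves(stones))


def best_move(stones: int, depth: int = 3) -> int:
    """Pick the move whose resulting position is worst for the opponent."""
    return max(
        legal_moves(stones),
        key=lambda take: -negamax(stones - take, depth - 1),
    )


print(best_move(5))  # takes 1, leaving the opponent a pile of 4
```

Everything the program "knows" lives in `handcrafted_eval`. Changing the rules of the game would require a person to rewrite that function by hand, which is the inflexibility described above.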

The Shift to Data-Driven Learning

Modern foundation models adopt a fundamentally different strategy. Rather than depending on pre-defined rules, these systems learn patterns from extensive datasets. This is comparable to the distinction between memorizing a phrasebook and genuinely learning a language—the latter is significantly more versatile and powerful.

Take AlphaGo as an example: it learned first from a large corpus of human expert games and then from extensive play against itself, and it produced strategies that surprised even professional players. This data-driven approach reached a level of play that earlier rule-based Go programs had not achieved.

Addressing Complex Reasoning Challenges

The Geometry Problem: An Insight into AI Reasoning

Let us explore a specific example that highlights the intricacies of reasoning tasks. Proving that the base angles of an isosceles triangle are equal may seem straightforward for a human but presents considerable challenges for AI systems.

The Human Approach:

- Visualize the triangle and its characteristics

- Recognize the symmetry inherent in the isosceles shape

- Make a creative leap by drawing an angle bisector from the apex

- Utilize congruent triangles to finalize the proof
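Written out, the human proof sketched in the steps above might look as follows. The point labels $A$, $B$, $C$, $D$ are assumptions introduced here for illustration, with $A$ as the apex and $D$ the point where the bisector meets the base.

```latex
\textbf{Claim.} In $\triangle ABC$ with $AB = AC$, we have $\angle ABC = \angle ACB$.

\textbf{Proof.} Let the bisector of $\angle BAC$ meet $BC$ at $D$. Then
\begin{align*}
AB &= AC && \text{(given)} \\
\angle BAD &= \angle CAD && \text{($AD$ bisects $\angle BAC$)} \\
AD &= AD && \text{(common side)}
\end{align*}
so $\triangle ABD \cong \triangle ACD$ by the side-angle-side criterion.
Corresponding angles of congruent triangles are equal, hence
$\angle ABD = \angle ACD$, that is, $\angle ABC = \angle ACB$.
```

The only step that is not mechanical is the decision to introduce $D$; once the bisector is drawn, the rest follows from a standard congruence criterion.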

The AI Challenge: At each step, an AI system faces many choices. It could draw various auxiliary lines, introduce different geometric constructions, or apply any of several theorems. The number of possible step sequences grows exponentially with the length of the proof, so exploring every option exhaustively is impractical.

This is where foundation models can help. Instead of searching blindly through all possibilities, they can learn to identify promising patterns and prioritize which paths to pursue.
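One way to picture this kind of guidance is best-first search, where a scoring function decides which partial solution to expand next. The sketch below is a toy under stated assumptions: states are integers, the "inference rules" in `RULES` are simple arithmetic steps, and `score` is a hand-written stand-in for what would be a learned model in a real system. None of these names come from an actual prover.

```python
import heapq
from typing import Callable, Dict, List, Optional

# Hypothetical "inference rules": each one maps a state to a new state.
RULES: Dict[str, Callable[[int], int]] = {
    "add3": lambda x: x + 3,
    "double": lambda x: x * 2,
    "sub1": lambda x: x - 1,
}


def score(state: int, goal: int) -> float:
    """Stand-in for a learned model; lower means more promising."""
    return abs(goal - state)


def guided_search(start: int, goal: int, max_expansions: int = 1000) -> Optional[List[str]]:
    """Greedy best-first search: always expand the most promising state."""
    frontier = [(score(start, goal), start, [])]
    seen = {start}
    expansions = 0
    while frontier and expansions < max_expansions:
        _, state, path = heapq.heappop(frontier)
        if state == goal:
            return path
        expansions += 1
        for name, rule in RULES.items():
            nxt = rule(state)
            if nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (score(nxt, goal), nxt, path + [name]))
    return None  # budget exhausted without reaching the goal


path = guided_search(1, 20)
print(path)  # a sequence of rule names leading from 1 to 20
```

The search never enumerates every sequence of rules. It follows whichever state the scorer rates highest, which is the role a trained model would play when ranking candidate proof steps. The path found this way is valid but not necessarily the shortest.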

The Universal Nature of Reasoning Problems

Interestingly, similar structures manifest across diverse domains. Consider the following parallels:

Chemical Synthesis vs. Mathematical Proofs:

- Both involve constructing tree-like structures

- In chemistry: combining molecules to form compounds

- In mathematics: connecting logical steps to derive conclusions

- Both necessitate creative insights regarding which paths to follow

Software Development vs. Theorem Proving:

- Both begin with high-level objectives and decompose them into specific tasks

- Both require comprehension of abstract concepts and their tangible applications

- Both benefit from recognizing reusable patterns and methodologies

This shared structure suggests that reasoning skills acquired in one domain may transfer to others, which is a potential advantage of foundation models.

The Three Pillars of Foundation Model Reasoning

1. Generativity: Crafting Solutions from the Ground Up

Traditional AI systems were confined to predicting from a limited set of options. In contrast, foundation models can generate entirely new solutions by modeling the probability distribution of successful approaches.

Analogy: This can be likened to the difference between answering multiple-choice questions and writing an essay. Multiple-choice questions restrict you to predefined options, while essay writing enables creative expression and original ideas. Foundation models function more like the essay approach, capable of generating unique constructions, innovative program code, or creative mathematical conjectures.

Real-World Applications:

- Drug Discovery: Generating new molecular structures with desired characteristics

- Software Engineering: Developing novel algorithms to address specific challenges

- Mathematical Research: Proposing new theorems and proof strategies

2. Universality: Cross-Domain Learning

A key strength of foundation models is their ability to learn general reasoning patterns applicable across various fields. This is similar to recognizing effective argument structures: once understood in one context, they can be applied to law, science, philosophy, or any other discipline.

Transfer Learning in Practice:

- Insights gained from analyzing code can assist in mathematical proofs

- Geometric reasoning capabilities can aid in molecular modeling

- Strategic thinking from game-playing can enhance optimization problem-solving

This cross-domain knowledge transfer can reduce the amount of training data needed for new tasks and may lead to more robust and adaptable solutions.

3. Grounding: Linking Symbols to Meaning

One of the most compelling capabilities of foundation models is their ability to connect abstract symbols to tangible understanding. When humans encounter the term "isosceles triangle," it conjures a visual image and geometric intuitions. Foundation models are beginning to form similar associations.

Multimodal Comprehension:

- Connecting mathematical equations to their graphical representations

- Linking programming code to its visual outcomes

- Associating chemical formulas with molecular structures

This grounding enables AI systems to reason more like humans do—not merely manipulating symbols but understanding the real-world significance of those symbols.

Current Applications and Success Stories

Mathematical Theorem Proving

Contemporary AI systems have demonstrated the ability to discover and prove mathematical theorems, occasionally producing proofs that are more concise and elegant than those developed by humans. These systems can:

- Generate conjectures by identifying patterns within mathematical data.

- Construct formal proofs in a systematic manner.

- Verify the accuracy of complex mathematical arguments.
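The first and last capabilities in this list, proposing a conjecture from data and then checking it, can be illustrated with a deliberately small sketch. The candidate formulas in `CANDIDATES` and the helper names are assumptions made for this example, and a numeric check over a finite range is evidence for a conjecture, not a proof of it.

```python
from typing import Callable, Dict, List


def sum_of_first_odds(n: int) -> int:
    """The "mathematical data": 1 + 3 + 5 + ... over the first n odd numbers."""
    return sum(2 * k + 1 for k in range(n))


# Hypothetical pool of closed forms that a model might propose.
CANDIDATES: Dict[str, Callable[[int], int]] = {
    "n^2": lambda n: n * n,
    "n(n+1)/2": lambda n: n * (n + 1) // 2,
    "2n-1": lambda n: 2 * n - 1,
}


def surviving_conjectures(values: range) -> List[str]:
    """Keep only the candidates that match the data for every tested n."""
    return [
        name
        for name, formula in CANDIDATES.items()
        if all(formula(n) == sum_of_first_odds(n) for n in values)
    ]


print(surviving_conjectures(range(1, 50)))  # ['n^2']
```

All three candidates agree at n = 1, and the wrong ones are eliminated at n = 2. Turning the surviving conjecture into a theorem still requires a proof, for example by induction, ideally checked by a formal proof assistant.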

Program Synthesis and Code Understanding

Foundation models have significantly impacted software development through:

- Code Generation: Producing programs based on natural language descriptions.

- Bug Detection: Identifying programming errors and recommending corrections.

- Code Translation: Converting code between various programming languages.

- Documentation: Automatically generating explanations for intricate code.

Scientific Discovery

In the fields of chemistry and materials science, AI systems are:

- Predicting optimal synthetic routes for novel compounds.

- Discovering new materials with specific desired properties.

- Accelerating drug discovery by identifying promising molecular candidates.

Challenges and Future Directions

The Data Scarcity Problem

In contrast to images or text, high-quality reasoning data is limited and costly to produce. Mathematical proofs, verified code, and scientific discoveries necessitate expert knowledge and thorough validation, creating a bottleneck in training advanced reasoning systems.

Potential Solutions:

- Synthetic Data Generation: Developing artificial yet realistic problems and solutions.

- Self-Supervised Learning: Enabling models to learn from the inherent structure of problems.

- Interactive Learning: Engaging human experts to facilitate the learning process.
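Of the options above, synthetic data generation is the easiest to sketch in a few lines. The example below assumes a toy domain, linear equations in one variable, and generates each problem by choosing the answer first so that every label is correct by construction. The class and function names are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LinearProblem:
    a: int
    b: int
    c: int
    answer: int  # the value of x that satisfies a*x + b = c

    @property
    def question(self) -> str:
        sign = "+" if self.b >= 0 else "-"
        return f"Solve for x: {self.a}x {sign} {abs(self.b)} = {self.c}"


def make_problem(rng: random.Random) -> LinearProblem:
    """Pick the answer first, then build the equation around it."""
    a = rng.randint(1, 9)
    x = rng.randint(-10, 10)
    b = rng.randint(-20, 20)
    return LinearProblem(a=a, b=b, c=a * x + b, answer=x)


def make_dataset(n: int, seed: int = 0) -> List[LinearProblem]:
    """A seeded generator, so the same dataset can be regenerated later."""
    rng = random.Random(seed)
    return [make_problem(rng) for _ in range(n)]


for problem in make_dataset(3):
    print(problem.question, "->", problem.answer)
```

Working backward from the answer avoids the expensive step of having an expert verify each example. The harder open problem, which this sketch does not address, is making synthetic problems as varied and as difficult as real ones.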

The Need for High-Level Planning

Current foundation models are proficient at next-step predictions but face challenges with long-term strategic planning. Humans typically approach complex issues by:

- Establishing a high-level strategy.

- Decomposing it into manageable tasks.

- Implementing the detailed plan.

Equipping AI systems to plan at this higher level of abstraction, rather than one step at a time, remains a significant challenge.

Reliability and Verification

As AI systems enhance their autonomous reasoning abilities, ensuring their reliability becomes imperative. We require methods to:

- Confirm the correctness of AI-generated proofs.

- Validate that synthesized code performs as intended.

- Assess scientific discoveries before their practical application.
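For synthesized code, the simplest form of validation is to run each candidate against a specification expressed as input/output cases. The following is a minimal sketch under that assumption; `candidate_a` and `candidate_b` are hypothetical stand-ins for model-generated implementations of absolute value, and `passes_spec` is an illustrative helper.

```python
from typing import Any, Callable, Iterable, Tuple

Case = Tuple[Tuple[Any, ...], Any]  # (arguments, expected result)


def passes_spec(candidate: Callable[..., Any], cases: Iterable[Case]) -> bool:
    """Return True only if the candidate matches every case without raising."""
    for args, expected in cases:
        try:
            if candidate(*args) != expected:
                return False
        except Exception:
            return False  # a crash counts as a failed case
    return True


def candidate_a(x: int) -> int:
    return x if x > 0 else -x


def candidate_b(x: int) -> int:
    return x  # plausible-looking but wrong for negative inputs


ABS_CASES = [((3,), 3), ((-3,), 3), ((0,), 0)]

print(passes_spec(candidate_a, ABS_CASES))  # True
print(passes_spec(candidate_b, ABS_CASES))  # False
```

Passing a finite set of cases shows only that no counterexample was found among them. Stronger guarantees need property-based testing or formal verification, and untrusted generated code should be executed in a sandbox rather than called directly as it is here.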

The Road Ahead: Implications and Opportunities

Education and Learning

Foundation models with robust reasoning capabilities have the potential to transform education by:

- Offering personalized tutoring that adjusts to individual learning preferences.

- Creating interactive environments for problem-solving.

- Generating practice problems customized to student requirements.

- Explaining complex concepts through diverse approaches.

Scientific Acceleration

By automating routine reasoning tasks while enhancing human creativity, these systems could significantly accelerate scientific advancements:

- Hypothesis Generation: Proposing innovative research avenues.

- Experimental Design: Refining experimental protocols.

- Literature Analysis: Detecting patterns across extensive research bodies.

- Collaborative Discovery: Fostering partnerships between humans and AI in research.

Ethical Considerations

As reasoning capabilities evolve, we must thoughtfully address:

- Transparency: Ensuring AI reasoning processes remain interpretable.

- Reliability: Developing systems that gracefully handle failures and acknowledge uncertainty.

- Human Agency: Preserving meaningful human involvement in critical decision-making.

- Equity: Guaranteeing fair distribution of benefits across society.

Conclusion: The Promise of Intelligent Partnership

The development of foundation models with strong reasoning capabilities signifies more than a technological milestone; it points to a future where AI systems can act as true intellectual partners rather than mere tools.

These systems will not replace human reasoning but will enhance it, managing routine cognitive tasks and allowing humans to concentrate on creativity, judgment, and meaning-making. Future geometry students may collaborate with AI tutors capable of visualizing problems in various ways, suggesting alternative proof strategies, and providing personalized guidance.

As we continue to explore the frontiers of AI reasoning, we are not simply developing smarter machines; we are forging new avenues for collaboration between humans and artificial intelligence to address the intricate challenges that lie ahead.

The evolution from rule-based systems to today’s foundation models has been extraordinary, yet we remain in the early phases of this transformation. The coming years will likely introduce even more advanced reasoning capabilities, unlocking possibilities that are currently beyond our imagination.

It is clear that the future of problem-solving will rely on a partnership between human insight and artificial intelligence, combining the strengths of both to confront challenges that neither could overcome independently.

Citation: Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., ... & Liang, P. (2022). On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258. Available at: https://arxiv.org/abs/2108.07258