The Early Days of AI: Understanding Our Current Landscape

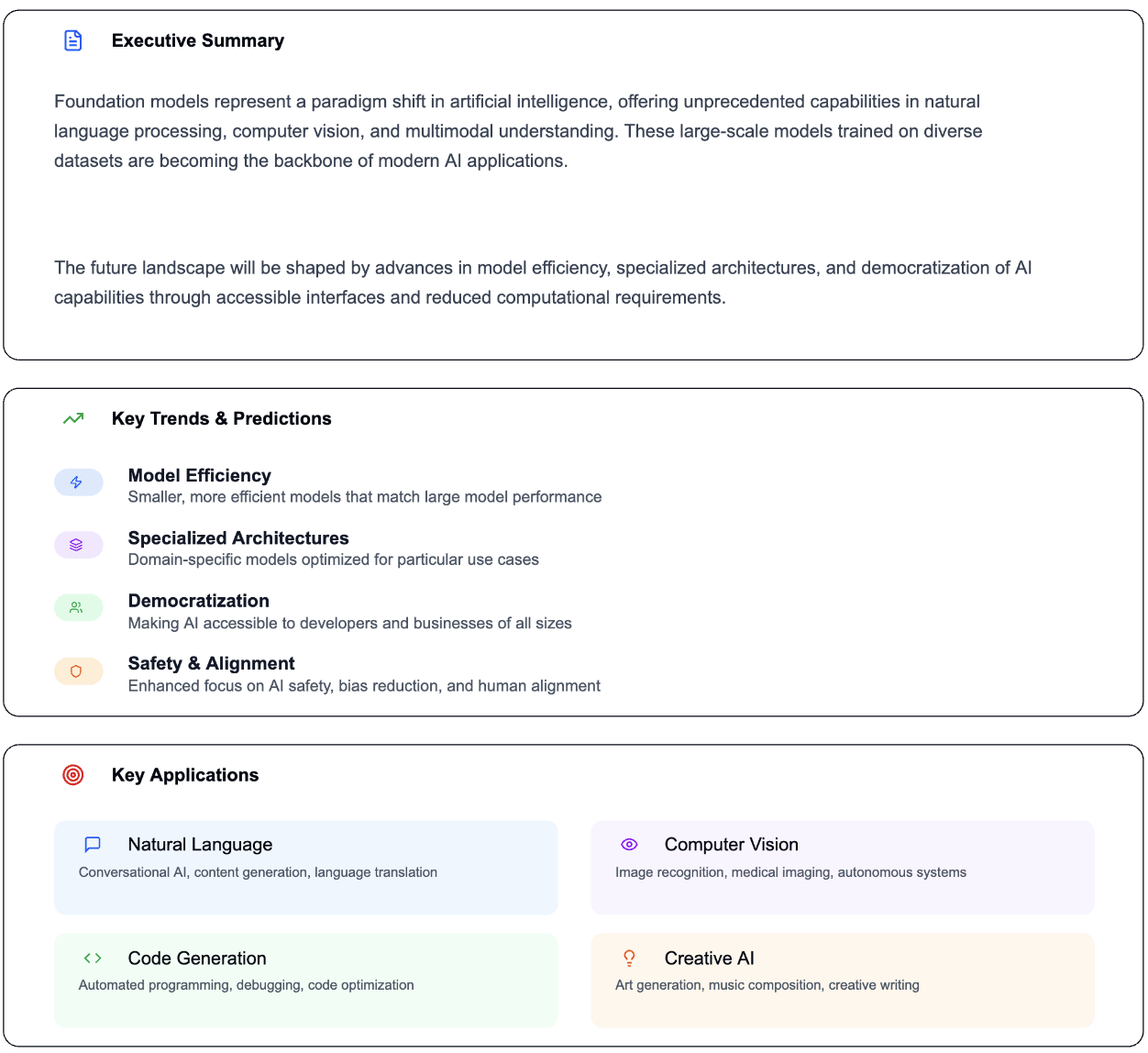

While recent advancements like ChatGPT have made headlines, we are still in the initial phase of the foundation model revolution. The situation resembles the internet in 1995: we recognize the immense potential, yet we are still working out the necessary rules, standards, and best practices.

At this moment, these advanced AI systems function as "research prototypes" that have been released to the public. It is akin to taking experimental vehicles for a spin on public roads: thrilling, but accompanied by uncertain risks and outcomes.

A Crucial Inquiry: Who Will Guide AI's Future?

The evolution of foundation models prompts a vital question that will influence technological advancements for the next decade: Who will steer the development of AI? The answer will impact various facets of society, from job markets to democratic processes.

The Divide: Industry vs. Academia

The Current Landscape: Industry's Dominance

Presently, the AI sector is primarily influenced by a select group of powerful players:

Major Tech Companies:

- Google: Innovators behind PaLM and Bard

- Microsoft: Major investor in and partner of OpenAI, creator of the GPT series and ChatGPT

- Meta (Facebook): Developers of LLaMA models

- Amazon: Expanding various AI services

AI Startups:

- OpenAI: Groundbreaking yet increasingly commercial

- Anthropic: Dedicated to AI safety

- AI21 Labs: Focused on specialized language models

Reasons for Industry Leadership:

- Extensive computational resources: Training a single large model can cost millions of dollars.

- Access to user data: Billions of interactions enhance model performance.

- Expert engineering teams: Specialists who work full-time on building and maintaining these systems.

- Rapid development: Ability to move swiftly without academic constraints.

The Importance of Academia: Why Universities Matter

Universities offer something that industry often lacks: a variety of perspectives and a commitment to the public good.

Academic Strengths:

- Interdisciplinary collaboration: Computer scientists partner with ethicists, sociologists, and economists.

- Long-term focus: Not motivated by short-term profits.

- Commitment to public interest: Striving for societal benefit.

- Diverse student representation: Reflecting a wide range of backgrounds and viewpoints.

Example: While a tech firm may aim to make its chatbot more engaging for increased user retention, a university team might investigate how to develop AI assistants that foster healthy digital habits.

Balancing Incentives: Market Forces vs. Social Good

When Profit Aligns with Public Benefit

At times, commercial interests can lead to beneficial outcomes for everyone:

Positive Scenarios:

- Enhanced accuracy: Companies desire dependable AI, leading to better performance for users.

- User-friendly designs: Intuitive AI tools promote wider adoption and accessibility.

- Efficiency gains: Faster, cost-effective AI benefits both businesses and consumers.

The Pitfalls of Profit-Driven Development

However, profit-centric approaches can create significant blind spots:

The "Malaria Problem": Just as pharmaceutical companies may neglect malaria treatments because the affected regions offer little profit, tech companies might overlook AI applications that would help marginalized communities because those applications promise little financial return.

Real-World Consequences:

- Digital divide: Advanced AI tools may only be accessible to affluent users.

- Language biases: Development concentrates on commercially profitable languages, sidelining speakers of smaller ones.

- Accessibility issues: Limited development for users with disabilities.

- Environmental neglect: The environmental cost of energy-intensive training may be disregarded when it is externalized rather than borne by the developer.

Example: An AI firm might invest millions in perfecting a shopping assistant for luxury goods while neglecting tools to support social workers aiding homeless populations.

The Accessibility Challenge: The Shift Away from Openness

The Era of Open AI Research (2010s)

There was a time when AI research was becoming more open and collaborative:

Key Features:

- Open-source frameworks: Tools like TensorFlow and PyTorch made AI more accessible.

- Shared datasets: Facilitated collaborative building on previous work.

- Reproducible research: Enabled verification and enhancement of studies.

- Community standards: Conferences adopted reproducibility checklists and guidelines.

Outcome: This openness fostered rapid innovation, allowing researchers globally to collaborate and compete fairly.

The Current Closed Landscape: Challenges with Foundation Models

Foundation models have reversed this trend towards openness:

Access Limitations:

- API-only access: Models like GPT-3 are only available through controlled interfaces.

- Lack of model releases: Trained models and their weights are frequently kept private.

- Opaque datasets: Training data is often not shared with researchers.

- High computational demands: Training now necessitates corporate-level budgets.

Illustrative Example: Imagine if only car manufacturers were allowed to test new safety features, while independent safety researchers were barred from accessing crash test data. This scenario mirrors the current state of AI research.

The Scale Factor: Understanding the Importance of Size

The Emergence of Capabilities

Certain AI capabilities only manifest at large scales:

In-Context Learning Example:

- Smaller models: Largely unable to pick up a new task from examples supplied in the prompt.

- Larger models: Gain this ability once they reach a sufficient size.

- Research Implication: Studying such phenomena requires models that cost millions of dollars to train.

Analogy: Studying these capabilities only in small models is like trying to understand hurricanes by observing small clouds; you need satellite-level data to grasp the full picture.
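To make in-context learning concrete, here is a minimal Python sketch that only builds a few-shot prompt; it does not call any model. The helper name `build_few_shot_prompt`, the translation task, and the `input => output` line format are illustrative assumptions. The point is that the task is specified entirely through examples in the input, so a sufficiently large model could complete the final line without any change to its weights.

```python
# Hypothetical helper: builds a few-shot prompt for in-context learning.
# The task and the "input => output" format are illustrative assumptions.

def build_few_shot_prompt(instruction, examples, query):
    """Return a prompt with an instruction, worked examples, and an open query.

    A large enough model may infer the task from the examples alone and
    complete the last line; no training or weight update takes place.
    """
    lines = [instruction]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    # Leave the query unfinished so the model's continuation is the answer.
    lines.append(f"{query} =>")
    return "\n".join(lines)


examples = [("sea otter", "loutre de mer"), ("cheese", "fromage")]
prompt = build_few_shot_prompt("Translate English to French:", examples, "peppermint")
print(prompt)
```

Running it prints the instruction, the two worked examples, and the unfinished line `peppermint =>`, which is the slot a model would be expected to fill.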

Academic Strategies and Their Limitations

Current Approaches:

- Research on smaller models: Attempting to extrapolate insights to larger scales.

- Analysis of existing models: Focusing on released models rather than training new ones.

- Collaborative efforts: Initiatives like EleutherAI pooling resources together.

Challenges of These Strategies: These methods resemble trying to understand ocean currents by examining puddles, or studying automotive safety using only bicycles. Certain phenomena can only be understood at scale.

The BERT Dilemma: Over-Reliance on a Single Model

A significant share of contemporary natural language processing research builds on BERT, a specific model released by Google, which creates several challenges:

Risks of Dependency:

- Arbitrary design choices: BERT's specific architecture shapes much of the research built on it.

- Ingrained biases: Derivative work can inherit BERT's biases and limitations.

- Innovation restrictions: Researchers may optimize for BERT's structure instead of exploring alternatives.

Example: This situation is comparable to modern architecture relying solely on modifications of a single building design, rather than exploring a range of fundamentally different approaches.

Exploring Potential Solutions: Creating an Equitable Landscape

Government Investment: Embracing the "Big Science" Model

Historical Precedents:

- Hubble Space Telescope: Paved the way for groundbreaking discoveries unattainable with smaller instruments.

- Large Hadron Collider: Demonstrated the necessity of global collaboration and substantial funding.

- Human Genome Project: Showcased the benefits of public investment in essential research.

AI Counterpart: Establishing a National Research Cloud could give academic researchers the computational power needed to train and analyze large foundation models.

Envisioning This Initiative:

- Shared Computing Infrastructure: Universities would gain access to high-performance computing resources.

- Open Datasets: Publicly funded and ethically curated training data available to all.

- Collaborative Platforms: A space for researchers globally to contribute and share knowledge.

Volunteer Computing: Making AI Development Accessible

Successful Initiatives:

- Folding@home: Enlisted volunteers' home computers to simulate protein folding.

- SETI@home: Conducted a distributed search for extraterrestrial intelligence.

- Bitcoin Mining: Although driven by financial rewards rather than volunteerism, illustrated the potential of distributed computing on a massive scale.

AI Application: The Learning@home project seeks to harness volunteer computing power from personal devices around the world to train foundation models.

Challenges to Consider:

- Network Limitations: Home internet connections may lack the bandwidth to exchange the large volumes of data that training requires.

- Coordination Complexity: Synchronizing training efforts across millions of devices can be intricate.

- Security Concerns: Safeguarding volunteer nodes from potential breaches is crucial.
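The coordination and network challenges above can be made concrete with a toy Python sketch of one synchronous training round in which some volunteers drop out. This is a generic averaging scheme chosen for illustration, not the design Learning@home actually uses; the functions `average_updates` and `training_round`, the dropout probability, and the toy update rule are all hypothetical.

```python
import random


def average_updates(updates):
    """Element-wise mean of the parameter updates that actually arrived."""
    if not updates:
        return None  # no volunteer reported back this round
    n = len(updates)
    return [sum(vals) / n for vals in zip(*updates)]


def training_round(params, volunteers, drop_prob, rng):
    """One hypothetical synchronous round.

    Each volunteer computes an update from the current parameters; with
    probability drop_prob it disconnects (e.g. a home connection goes offline)
    and its work is lost. The coordinator averages whatever it received.
    Returns the new parameters and the number of volunteers that reported.
    """
    received = []
    for compute_update in volunteers:
        if rng.random() < drop_prob:
            continue  # this node dropped out before reporting
        received.append(compute_update(params))
    avg = average_updates(received)
    if avg is None:
        return params, 0  # nothing arrived; keep parameters unchanged
    new_params = [p - step for p, step in zip(params, avg)]
    return new_params, len(received)


# Toy update rule (assumption): each volunteer returns 0.1 * params, i.e. a
# gradient step with learning rate 0.1 on the loss 0.5 * ||params||^2.
rng = random.Random(0)
volunteers = [lambda p: [0.1 * x for x in p] for _ in range(5)]
params = [1.0, -2.0]
for round_idx in range(3):
    params, n = training_round(params, volunteers, drop_prob=0.3, rng=rng)
    print(f"round {round_idx}: {n} of {len(volunteers)} reported, params={params}")
```

A real system would also have to deal with stale updates, limited bandwidth, and malicious or faulty nodes, all of which this sketch ignores.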

The Importance: Why This Matters for Everyone

Scenario 1: A Future Dominated by Industry

If current trends persist, we may see:

- Concentration of Power: A limited number of companies controlling AI development.

- Commercial Bias: AI systems designed with profit as a priority over public good.

- Restricted Innovation: A lack of diverse approaches and perspectives.

- Democratic Concerns: Essential technologies managed by unaccountable entities.

Scenario 2: A Future with Academic Balance

With adequate investment in academic research, we could achieve:

- Diverse Development: Multiple methodologies and philosophies in AI design.

- Public Interest Focus: AI created for the benefit of society at large.

- Open Innovation: Shared tools and knowledge fostering rapid advancement.

- Democratic Oversight: Public institutions playing a role in guiding AI development.

Real-World Consequences

Education: Current risk: AI tutoring systems tailored for engagement rather than deep learning. Alternative: AI designed to foster thorough understanding and critical thinking skills.

Healthcare: Current risk: AI diagnostic tools accessible only to affluent hospitals. Alternative: Open-source AI tools available to community health centers.

Employment: Current risk: AI deployment focused on reducing costs at the expense of worker well-being. Alternative: AI systems aimed at enhancing human capabilities rather than replacing them.

The Path Ahead: Collaboration Over Competition

What Industry Offers:

- Resources and Scale: The capacity to train and implement large models.

- Engineering Expertise: Skills for managing complex systems.

- Real-World Deployment: Experience with applications for consumers.

- Speed and Agility: Rapid development and refinement.

What Academia Contributes:

- Ethical Perspective: Insight into social implications.

- Disciplinary Diversity: Varied viewpoints on intricate issues.

- Long-Term Vision: Research extending beyond immediate commercial interests.

- Public Accountability: Commitment to transparent, peer-reviewed studies.

The Ideal Collaboration

The future of foundation models should be characterized by:

- Shared Infrastructure: Government-funded computing resources for research, and open datasets through public-private partnerships.

- Ethical Integration: Involvement of social scientists and ethicists from the onset, along with public feedback on AI design.

- Balanced Innovation: Merging industry efficiency with academic integrity, ensuring commercial applications align with public interest.

Conclusion: A Crucial Moment

We find ourselves at a pivotal point in AI development. The choices made in the coming years will determine whether foundation models serve all of humanity or cater primarily to a select few.

The stakes are immense. These models will significantly influence:

- Our methods of working and learning.

- Our access to information and decision-making processes.

- The functionality of democratic institutions.

- The distribution of economic opportunities.

Achieving success requires acknowledging that this challenge extends beyond technical aspects—it's a social, ethical, and political issue that necessitates a diverse array of human expertise and perspectives.

The future of AI should not be dictated solely by those with the most resources. Instead, it should be crafted by a variety of voices collaborating to ensure these powerful technologies benefit the common good.

The pressing question is not whether foundational models will reshape society, but rather if that transformation will be advantageous for everyone or just a privileged few. The outcome hinges on the decisions we make today.

Citation: Bommasani, Rishi, et al. "On the Opportunities and Risks of Foundation Models." arXiv preprint arXiv:2108.07258 (2021). https://arxiv.org/abs/2108.07258