Introduction: A Global Vision and a Growing Challenge

In the year 2000, world leaders gathered at the United Nations Millennium Summit with a powerful vision for the future. They declared that education was the "foundation for human fulfillment, peace, sustainable development, economic growth, decent work, gender equality and responsible global citizenship." This wasn't just a feel-good statement; it became a concrete goal, a promise to "ensure inclusive and quality education for all and promote lifelong learning." But let's be honest, that's a huge mountain to climb. Providing high-quality, inclusive education on a massive scale is incredibly difficult, and the costs are rising fast.

We're all feeling the pinch. In the United States, for example, student loan debt has ballooned to a staggering $1.6 trillion, more than the country's total credit card debt. That's a symptom of a system in which the cost of education is growing faster than the economy. We're facing a massive gap between the demand for education, especially with the rising need for adult retraining, and our ability to provide it. Unfortunately, this gap hits some demographics harder than others, creating concerning disparities in achievement. But what if there were a way to close this gap?

Foundation Models: A Beacon of Hope in the Digital Age?

With the rise of the digital age, we've seen promising progress with computational approaches to learning. Artificial Intelligence (AI) has shown its potential to help both students and teachers. We've seen AI systems that can give meaningful feedback to students, help teachers improve their methods, and even create personalized learning experiences that adapt to a student's unique needs. This is all great, but there's a big problem.

So far, most of these AI solutions have been like custom-made suits—they’re designed for one specific task. They require a ton of data to be collected from scratch, which is expensive and time-consuming. It’s simply not feasible to create a custom solution for every single educational task out there. But what if we could use a single, powerful tool for a variety of tasks and subjects?

This is where foundation models come in. Think of them as a versatile Swiss Army knife for AI. They are large-scale models trained on vast amounts of data, which gives them a general understanding of a subject matter and a range of capabilities. We're already seeing foundation models make an impact in education. For example, a model called MathBERT is being used for "knowledge tracing," which is the challenge of tracking a student's understanding over time. Another model, GPT-3, is being used to help provide feedback on open-ended tasks like coding questions. These are just the beginning, and they make us wonder: can foundation models bring about even more transformative changes?
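To make "knowledge tracing" a bit more concrete, here is a minimal sketch of Bayesian Knowledge Tracing (BKT), a classical approach that predates foundation models. To be clear, this is not how MathBERT works; it only illustrates the task of updating an estimate of a student's mastery after each answer. The function names and every parameter value (slip, guess, and learning rates, plus the starting estimate) are illustrative assumptions, not fitted to real data.

```python
def bkt_update(p_known, correct, p_slip=0.1, p_guess=0.2, p_learn=0.15):
    """Return the updated probability that a student has mastered a skill.

    p_slip:  chance of answering wrong despite knowing the skill (assumed).
    p_guess: chance of answering right without knowing the skill (assumed).
    p_learn: chance of learning the skill during one practice step (assumed).
    """
    if correct:
        evidence = p_known * (1 - p_slip)
        posterior = evidence / (evidence + (1 - p_known) * p_guess)
    else:
        evidence = p_known * p_slip
        posterior = evidence / (evidence + (1 - p_known) * (1 - p_guess))
    # The student may also pick up the skill during this practice opportunity.
    return posterior + (1 - posterior) * p_learn


def trace(responses, p_init=0.3):
    """Track the mastery estimate across a sequence of right/wrong answers."""
    estimates = []
    p = p_init
    for correct in responses:
        p = bkt_update(p, correct)
        estimates.append(p)
    return estimates
```

Calling `trace([True, True, False, True])` returns one estimate per answer: the estimate rises after each correct answer and drops after the incorrect one. A foundation-model approach would try to learn this kind of tracking from data instead of relying on hand-set parameters.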

The Ethical Minefield of AI in Education

Before we get carried away with all the cool possibilities, we have to pump the brakes and think about the ethics. The goal of education isn't just to transfer information; it's deeply tied to long-term social impact. When we introduce a disruptive technology like foundation models into this space, we have to be incredibly thoughtful about the consequences.

Unseen Biases: A Silent Threat

Just like any AI system, foundation models can have biases. If the data used to train the model is biased, those biases can be reflected, and even amplified, in its decisions. Imagine a model that's meant to provide feedback to students but consistently misinterprets the work of students from certain backgrounds because the training data didn't represent them well. This could have huge implications for equity of educational access. It's a bit like a funhouse mirror: small distortions in the input data can lead to hugely distorted outcomes.

The Human Element: Removing Teachers from the Loop?

One of the big goals of AI in education is to increase productivity. You can almost hear the decision-makers thinking, "If AI can teach more effectively, maybe we don't need as many human teachers!" But what would happen if we removed teachers from the equation? Could a system optimized purely for "learning" have negative effects on a student's socio-emotional development? Teachers are a crucial modulating force, a support system that an AI might not be able to replicate. We are already seeing a rise in loneliness among younger generations, and the classroom is often a place where students learn essential social skills and find community. Could a future dominated by AI tutors make this worse?

Academic Integrity: The "Who Did the Work?" Problem

As AI becomes more powerful, it will get much harder for teachers to figure out how much of a student's work is their own. For example, GitHub Copilot, an AI pair programmer, is already out there. How will this change computer science education? A beginner programmer might struggle with a fundamental concept, but Copilot could make it trivial. This could undermine the learning experience and make it difficult to assess a student's true understanding. It's the same kind of challenge we've faced before with things like calculators in math class or Google Translate in language courses. The key is to figure out how these tools can coexist with traditional instruction, not replace it.

Privacy and Security: Protecting Our Students

Student privacy is a huge deal. Laws like the Family Educational Rights and Privacy Act (FERPA) in the United States protect student information, and the Children's Online Privacy Protection Act (COPPA) provides extra layers of protection for kids under 13. These laws can create a big hurdle for AI researchers who need large amounts of student data to train their models. There's also a serious concern that the training data, which could contain private student information, could somehow leak from a foundation model. These are not small issues, and they are challenges that researchers will need to solve before these models are widely adopted.

Foundation Models and Student Understanding: The Feedback Challenge

Now that we’ve covered the ethical considerations, let’s dive into a specific, powerful application of foundation models: understanding student thought. If we want to build genuinely helpful AI tools, we first have to understand the learner we're trying to help. This is particularly important for open-ended tasks like writing, drawing diagrams, or writing code. It's easy for an AI to grade a multiple-choice test, but how does it grade a short story? This is what we call the "feedback challenge."

Beyond Right or Wrong: The Art of "Noticing"

To give effective feedback, an AI needs two core capabilities:

- Subject matter expertise: It needs to understand the topic, whether it's physics, coding, or history.

- Diagnostic ability: It needs to "notice" why a student made a mistake. "Noticing" is a technical term in education for inferring the reasons behind an error.

The problem is that in a typical classroom, there just isn't enough data for an AI to learn these capabilities from scratch. This is where foundation models can shine.

Adapting for Subject Matter

Foundation models are great at the first part of this challenge. They are trained on a massive amount of data, so they have a baseline understanding of a wide variety of subjects. With just a few examples, a foundation model like GPT-3 can quickly adapt to understand what good code looks like or what a well-written paragraph should contain. We can also adapt these models using other sources of data like math textbooks, podcasts for spoken language understanding, or even historical student answers from educational platforms. This unified approach allows the models to comprehend a subject through richer, more diverse information sources.
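Adapting a model "with just a few examples" is usually done by placing those examples directly in the prompt, often called few-shot prompting. Here is a minimal sketch of how such a prompt might be assembled. The function name `build_few_shot_prompt`, the prompt layout, and the default task wording are all assumptions made for illustration; sending the prompt to an actual model is left out on purpose.

```python
def build_few_shot_prompt(
    examples,
    new_submission,
    task="Give brief, encouraging feedback on the student's code.",
):
    """Assemble a few-shot prompt from (submission, feedback) pairs.

    The layout here is a hypothetical convention, not a required format.
    """
    parts = [task, ""]
    for submission, feedback in examples:
        parts.append(f"Student submission:\n{submission}")
        parts.append(f"Feedback:\n{feedback}")
        parts.append("")
    # End with an open "Feedback:" slot for the model to complete.
    parts.append(f"Student submission:\n{new_submission}")
    parts.append("Feedback:")
    return "\n".join(parts)
```

With two or three teacher-written (submission, feedback) pairs as `examples`, the returned string ends in an open `Feedback:` slot, so a text-completion model would be nudged to continue in the same style as the examples.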

The Next Frontier: Training Models to "Notice"

The second capability—the ability to "notice"—is much more difficult. It's one thing to know that the answer "315" is wrong for the question "what is 26 + 19?" but it's another thing entirely to know why the student answered that way. (They likely added the digits separately: 2+1=3 and 6+9=15, and then put them together.) This is the kind of insight a human teacher has, and it’s what allows them to correct a misconception rather than just marking the answer as wrong. Training a foundation model to do this is a huge challenge, but it's not impossible. Researchers are exploring ways to creatively use publicly available data, like interactions on StackOverflow, to help models diagnose student mistakes.
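The "26 + 19 = 315" example can be written out as a tiny hand-coded diagnostic. This is only a sketch of what "noticing" means for one known misconception, assuming non-negative whole numbers; the function names are made up for illustration. The hard part, and the reason foundation models are interesting here, is doing this for misconceptions nobody has written a rule for.

```python
def add_without_carrying(a, b):
    """Add column by column, writing each column sum in full with no carry.

    Assumes a and b are non-negative integers.
    """
    a_digits, b_digits = str(a), str(b)
    width = max(len(a_digits), len(b_digits))
    a_digits, b_digits = a_digits.zfill(width), b_digits.zfill(width)
    column_sums = [str(int(x) + int(y)) for x, y in zip(a_digits, b_digits)]
    return int("".join(column_sums))


def diagnose_addition(a, b, answer):
    """Guess why a student gave `answer` for a + b (one misconception only)."""
    if answer == a + b:
        return "correct"
    if answer == add_without_carrying(a, b):
        return "added each column separately without carrying"
    return "unrecognized error"
```

Here `diagnose_addition(26, 19, 315)` returns `"added each column separately without carrying"`, because 2+1 gives 3 and 6+9 gives 15, which are then written side by side. An answer like 44 falls through to `"unrecognized error"`, which is exactly where a rule-based approach runs out of road.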

Beyond Diagnostics: Foundation Models for Instruction

Understanding a student is only the first step. The next is providing high-quality instruction. This is where things get even more interesting. Providing effective instruction requires another layer of understanding: pedagogy. This is the art and science of teaching. It includes everything from asking Socratic questions to using encouraging language and providing analogies that connect with a student's interests.

The Language of Teaching

Teachers don't talk like the average person on the internet. They are trained to speak with respect and to help students form a positive identity with what they are learning. We saw the dangers of not accounting for this with Microsoft's Twitter bot "Tay," which started spouting hate speech within 24 hours of going live. If we want to create an AI that can teach, we need to adapt it to the "language of teaching." This could involve training models on data from sources like lecture videos, recorded office hours, or even carefully curated lesson plans.

The Power of Analogy: Learning from the Real World

Remember how babies learn language? They don't just listen to words; they see the world. Their caretakers point to objects while they talk about them. This "grounded" learning is powerful, and foundation models can replicate this through multi-modal data. Imagine an AI that can integrate text, images, and audio. It could teach you Swahili and point out that the word for 8, "nane," is a "false friend" because it sounds like the English word for 9, "nine." Or, it could show you a diagram and explain a complex physics concept with a real-world analogy. This kind of rich, contextual learning is something that foundation models are uniquely positioned to provide.

Conclusion: A Balanced Path Forward

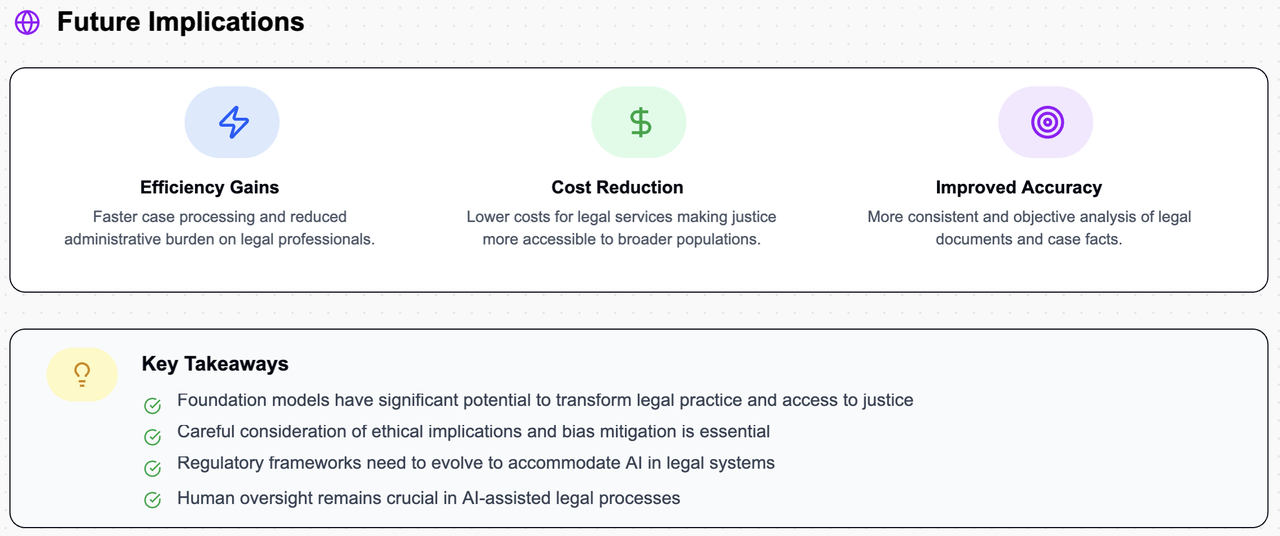

There's no doubt that foundation models are an exciting new frontier for education. They have the potential to make high-quality learning more accessible and to help teachers provide more personalized support. But we have to be smart about it. The path forward isn't to simply replace teachers with AI. It's about using these powerful tools to augment human capabilities, to free up teachers to focus on the socio-emotional development of their students, and to provide learning experiences that are both effective and equitable. We need to be vigilant about the ethical considerations, from bias to privacy, and ensure that these models are developed with the student's best interests at heart.

FAQs

What is a foundation model in education? A foundation model is a large-scale AI model trained on a vast amount of data from various sources (like text, images, or code) to gain a general understanding of a subject. In education, these models can be adapted to perform multiple tasks, such as diagnosing student mistakes, providing feedback, or generating instructional content, without needing to be built from scratch for each specific purpose.

How can foundation models address the rising cost of education? By providing a reusable, general-purpose approach, foundation models can help reduce the cost and difficulty of developing new educational tools. Instead of creating custom solutions for every task, a single foundation model can be adapted for many different subjects and challenges, potentially making high-quality educational resources more affordable and scalable.

What are the main ethical concerns with using AI in schools? Key ethical concerns include the potential for data bias to reinforce inequalities, the risk of removing human teachers from the learning process which could impact students' social skills, challenges with academic integrity as students use AI tools, and significant privacy and security issues related to student data.

Will foundation models and AI replace teachers? They shouldn't, and that isn't the goal. The aim is to use these tools to augment the teacher's role, not to replace it. AI can handle tasks like providing basic feedback or adapting content, freeing up teachers to focus on the human aspects of education, such as mentoring, fostering socio-emotional skills, and building a positive classroom environment.

How can we ensure that AI in education is equitable? Ensuring equity requires intentional effort. This includes training models on diverse and inclusive data, actively working to identify and mitigate biases, and making sure that the benefits of these technologies are available to all students, regardless of their demographic or socioeconomic background. We must ask, "Who benefits?" and design our systems to ensure everyone does.

Citation: Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., ... & Liang, P. (2022). On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258. Available at: https://arxiv.org/abs/2108.07258