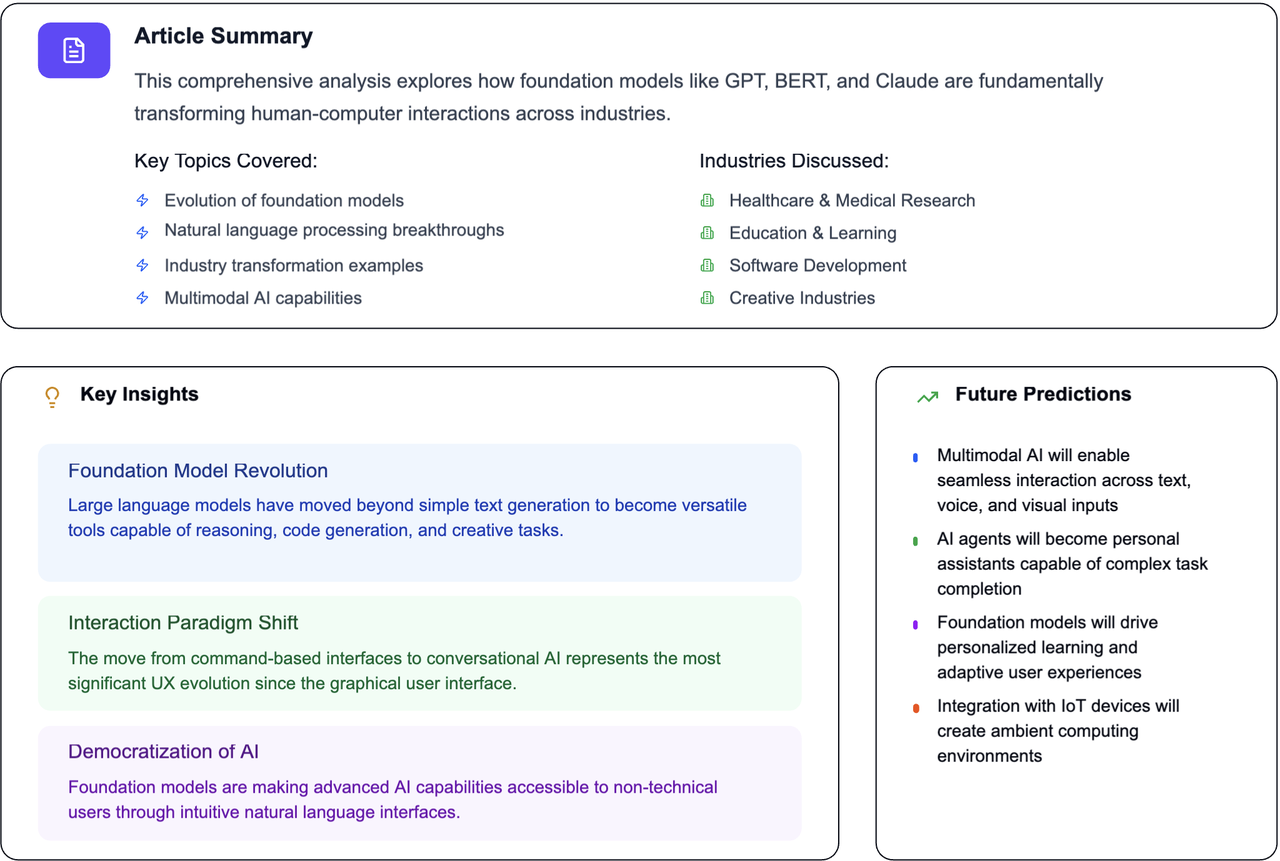

Have you ever wondered how artificial intelligence is evolving right before our very eyes? It's no secret that AI is changing the world, but the pace of innovation is truly breathtaking. We're talking about a fundamental shift in how we, as humans, interact with technology, and it's all thanks to something called "foundation models."

Think about it: remember when AI was mostly confined to highly specialized tasks, requiring tons of expertise to even get started? Well, those days are rapidly becoming a distant memory. Today, we're witnessing a paradigm shift, a movement towards AI that's more accessible, more versatile, and dare I say, more human in its interactions. This isn't just about making things a little bit easier; it's about unlocking entirely new possibilities for what we can achieve with AI, whether you're a seasoned developer or just someone curious about what the future holds. We're on the cusp of a truly interactive AI era, and I'm excited to walk you through how foundation models are at the heart of it all.

Stepping into the Future: What Exactly Are Foundation Models?

So, what's the big deal with these "foundation models" everyone's talking about? Well, think of them as the superheroes of the AI world: the ones with incredible versatility and power. You may already have encountered early examples, like GPT-3, which generates human-like text, or DALL·E, which conjures up images from simple text descriptions. These aren't one-trick ponies. They can handle a wide range of tasks, and some can work across different forms of data, from plain text to images.

What makes them so revolutionary? It's their ability to serve as a foundation (hence the name!) upon which countless AI-infused applications can be built. In practice, a single model is trained once on broad data and then adapted to many downstream tasks, either by fine-tuning it or simply by describing the task in a well-crafted prompt. Imagine having a super-smart generalist that can quickly adapt to new situations. As these models mature, their capabilities are likely to keep expanding, pushing the boundaries of what's possible and changing how we interact with AI day to day. We're talking about a future where rapidly prototyping and building highly dynamic, generative AI applications becomes a reality for many people, not just a possibility.

This isn't just some abstract concept; it has profound implications for two key groups of people: the application developers who will leverage these models to craft our digital experiences, and us, the end-users, who will interact with and be shaped by these AI-powered creations. And here's the kicker: in some truly fascinating scenarios, the traditional divide between developers and users might even begin to blur, opening up exciting new avenues for co-creation and personalized AI experiences. Ready to dive deeper? Let's go!

Bridging the Gap: Empowering Developers with Foundation Models

Now, let's chat about how these foundation models are changing the game for the folks who build our AI-powered applications – the developers. If you've ever tried to create something with AI, you know it can be a bit of a marathon. It often involves tons of data, serious computing power, and a whole lot of specialized skills. This can make the process of designing novel and truly positive human-AI interactions incredibly challenging. It's like trying to bake a gourmet cake from scratch every single time you want a slice – a lot of work!

The traditional approach often clashes with the iterative prototyping process that's so crucial for understanding and meeting user needs. We want to build something that people genuinely find useful and enjoyable, and that usually requires a lot of trial and error, feedback loops, and adjustments. But when the cost of each iteration is so high, it can stifle innovation and make it difficult to truly nail that user experience. This is where foundation models enter the scene, ready to be a game-changer.

The Iterative Dance: Prototyping and User Needs

Imagine trying to build a house without ever checking whether the design works for the people who will live in it. Sounds a bit silly, right? Yet that's roughly what traditional AI development can feel like. Crafting a powerful, task-specific AI model demands large volumes of data, serious computing resources, and specialized skills, and much of that cost is paid again whenever the design changes. That clashes with the nimble, iterative prototyping that's essential for understanding and satisfying user needs and values. It's like being asked to redesign a skyscraper as casually as you'd rearrange the furniture in a cottage: in practice, teams simply can't iterate that freely.

This friction means that developers often have to make compromises, potentially sacrificing the fine-tuning that makes an AI truly delightful or intuitive for a user. We've seen some fantastic progress in areas like interactive machine learning and frameworks for communicating AI uncertainty to users. But let's be honest, there's still a mountain to climb. The good news? Foundation models are like a powerful new set of tools in the developer's toolbox, ready to help us overcome many of these long-standing obstacles. They offer the tantalizing promise of significantly lowering the "difficulty threshold" for application development, making it easier for even non-ML experts to quickly prototype sophisticated AI applications. It's like having a master builder at your side, ready to lay the foundation so you can focus on the unique architectural flourishes.

Taming the Beast: The Challenge of Unpredictability

Now, here's a little secret: even with all their brilliance, AI models can be a bit like a wild card. Their responses can be, well, unpredictable, and they can churn out a vast array of generative outputs. This makes it a real head-scratcher for developers trying to build a clear "mental model" of how the AI will perform. It's like trying to understand the full range of notes a virtuoso can play without ever hearing them practice – you're left guessing! This unpredictability is further compounded by the sheer generative power of foundation models. While their "high ceiling" means they can achieve incredible things, it also means they can be even more complex and, at times, more challenging to manage than their single-purpose AI predecessors.
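
Part of that variety is by design. Generative language models usually produce text one token at a time by sampling from a probability distribution over possible next tokens, and a "temperature" setting controls how spread out that distribution is. The toy Python sketch below is not any real model's code: the three candidate words and their scores are made up purely to show the mechanics.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens, logits, temperature, rng):
    """Draw one token at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical scores a model might assign to three candidate next words.
tokens = ["great", "fine", "terrible"]
logits = [2.0, 1.0, 0.1]

rng = random.Random(0)  # fixed seed so the demo is repeatable
for t in (1.5, 1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    samples = [sample_next_token(tokens, logits, t, rng) for _ in range(10)]
    print(f"T={t}: p(great)={probs[0]:.2f}, samples={samples}")
```

Running it shows the probability of the top-scoring word climbing as the temperature drops, with the sampled words tending to concentrate on it. That's one reason developers often lower the temperature when they need consistent, predictable answers, at the cost of less varied output.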

Recent research has highlighted these difficulties, showing that getting models like GPT-3 to consistently perform a specific task can be a real puzzle. Understanding their full capabilities is still an active area of exploration, and honestly, we're all still learning! So, what's a developer to do? Well, to make AI-infused applications more reliable and trustworthy, future work needs to really dig into how we can achieve more predictable and robust behaviors from these foundation models. This could involve techniques like fine-tuning, or for language-based interactions, clever prompt-engineering or calibration. The goal is to "wrangle" these powerful models into forms that are more manageable, allowing developers to harness their immense power without constantly feeling like they're trying to catch lightning in a bottle.
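
Prompt engineering and calibration act on the model itself; it also helps to be defensive about what comes back. A common pattern is to ask the model to answer with exactly one word from a fixed set, accept the reply only if it matches, and otherwise fall back to something safe such as human review. The Python sketch below assumes a two-label moderation task; the label names and the `needs_review` fallback are hypothetical.

```python
from typing import Optional

# Hypothetical label set for one moderation task; the prompt is assumed to ask
# the model to reply with exactly one of these words.
ALLOWED_LABELS = {"allowed", "removed"}


def parse_label(raw_output: str) -> Optional[str]:
    """Return the label if the reply is exactly one allowed word, else None."""
    # Tolerate case, surrounding whitespace, and trailing periods, "!" or quotes only.
    normalized = raw_output.strip().lower().strip(".!'\" ")
    return normalized if normalized in ALLOWED_LABELS else None


def decide(raw_output: str, fallback: str = "needs_review") -> str:
    """Use the model's label when it parses cleanly; otherwise route to a person."""
    label = parse_label(raw_output)
    return label if label is not None else fallback


for reply in [" Removed.\n", "allowed", "Hmm, probably not allowed?", ""]:
    print(repr(reply), "->", decide(reply))
```

Notice that "Hmm, probably not allowed?" is sent to review instead of being read as `allowed`. Strict matching like this trades some coverage for predictability, which is usually the right trade when a wrong automatic decision is costly.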

A New Dawn for Users: Transforming Our AI Experiences

Beyond the realm of developers, how will foundation models shake up the way we, the end-users, interact with AI-infused applications? This is where things get really exciting, because these models aren't just making life easier for creators; they're fundamentally altering our digital experiences. For a long time, the guiding principle for user-facing AI has been about "augmenting" human abilities, not replacing them. Think of it like a really smart co-pilot, helping you fly the plane, not taking over the controls entirely. And guess what? This philosophy isn't going anywhere. We'll still prioritize maintaining user agency and reflecting their values in AI-powered applications.

However, the forms these interactions take are about to diversify dramatically. Why? Because of the sheer generative and multi-modal capacities of foundation models. Imagine creating stunning multimedia content with just a few intuitive specifications, even if you're a complete novice. That's already starting to happen! From collaborative writing tools to text-to-image generators and even AI-powered code completion for programmers, we're seeing the early glimpses of a new frontier. But this is just the beginning.

Beyond Augmentation: Unlocking New Interaction Paradigms

Within that augmentation philosophy, familiar design questions remain. When an AI should take the initiative and when it should wait for the user's direct manipulation will still need careful consideration. And incorporating user values directly, through participatory and value-sensitive design processes that actively involve all stakeholders, will remain paramount.

What is set to change is the range of interactions that become possible, thanks to foundation models' powerful generative and multi-modal capacities. We're already seeing early foundation model-powered tools that let even novice content creators produce high-quality multimedia from coarse, intuitive specifications. Imagine a budding musician providing thematic material for a song and an AI generating it in the style of their favorite band! Or a small business owner describing their product and an AI building an entire website from that simple description. Foundation models could also enrich static multimedia, for example by automatically remastering legacy content into new formats or generating a unique experience for each player in a video game. We might even see entirely new forms of multi-modal interaction through interfaces that themselves mix modalities, such as visual and gesture-based commands.

The Ripple Effect: Societal Implications of AI-Powered Interactions

As we revel in the magic of AI-powered innovations, it's absolutely crucial to hit the pause button and ponder the broader societal implications. How will these powerful new applications change the very fabric of our communication? Will AI models start writing our emails for us? If so, how will this reshape our trust, credibility, and even our sense of identity, knowing that the words might not be entirely our own? How will it alter our writing styles? Who will truly own the authorship of AI-generated content, and how might this shifting responsibility be misused?

These aren't just academic questions; they are vital considerations for the future we are building. What are the long-term impacts of foundation models on our work, our language, and our culture? It's important to remember that these models are trained on observed data, which doesn't necessarily tell us about cause and effect. So, how can we ensure that the use of foundation models leads us to a desired future, rather than simply repeating the patterns and biases of the past? While these issues aren't entirely new to AI, the growing power of foundation models means they are likely to be amplified and to become far more prevalent. We're already seeing glimpses of this future in applications like AI Dungeon, Microsoft PowerApps, and GitHub Copilot. As we envision new forms of interaction, it becomes increasingly important to think critically about their implications for individuals and society, so that we maximize the positive impact and mitigate unintended consequences.

The Blurring Lines: When Users Become Developers

Hold on to your hats, because here's a genuinely mind-bending idea: what if the wall separating AI developers from everyday users starts to crumble? For years, creating a bespoke AI model meant deep machine learning expertise, substantial computing power, and large amounts of training data. It was an exclusive club, right? And while generic AI models might suffice in some situations, we've seen many examples where they fail to meet the unique needs and values of specific users or communities.

Think about an online community trying to moderate comments. What's considered "problematic" in one community might be perfectly acceptable, or even encouraged, in another, so a one-size-fits-all AI model just won't cut it. Similarly, AI-powered tools designed for a general population can fall flat for users with different abilities or needs if the tools can't be adapted quickly. Some promising research has explored how end-users might "co-create" AI models by providing data or parameters, but these efforts have largely focused on relatively simple models. What if foundation models could change that?

Empowering the Non-ML Expert: A New Era of Customization

This is where foundation models point toward a genuinely new possibility: empowering people who aren't machine learning experts to shape AI to their specific needs. Imagine a world where you don't need to be a coding wizard to make AI work the way you want it to. If foundation models can lower the difficulty barrier for building AI-infused applications, they could create a real opportunity to link user needs and values much more tightly to the models' behaviors, by letting users themselves become active participants in the development process.

Consider this: recent work has shown that models like GPT-3 can perform classification tasks with very few examples, or even with no examples at all, simply by being given a clear task description in natural language. This is powerful! An online community, for instance, could leverage this capability to create custom AI classifiers that filter content based on their own agreed-upon norms. No more relying on a generic, out-of-the-box solution that might miss the nuances of their unique culture.
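
To make this concrete, here is a minimal Python sketch of how such a community might turn its rules into a prompt. It only assembles the text; sending it to a model and reading the completion after the final `Label:` is left out, because no particular model or API is assumed. The `Post:`/`Label:` layout, the rules, and the example posts are all hypothetical (the science-community norm echoes the science-forum example later in this post). Passing an empty example list gives a zero-shot prompt; adding labeled examples makes it few-shot.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    text: str
    label: str


def build_prompt(task_description: str, examples: List[Example], new_post: str) -> str:
    """Assemble a zero-shot (no examples) or few-shot prompt for a text-completion model.

    The model is expected to continue the text after the final "Label:".
    """
    lines = [task_description.strip(), ""]
    for ex in examples:  # each worked example shows the model the community's norms
        lines += [f"Post: {ex.text}", f"Label: {ex.label}", ""]
    lines += [f"Post: {new_post}", "Label:"]
    return "\n".join(lines)


# Hypothetical rules for a science-discussion community.
rules = (
    "Label each post as allowed or removed. In this community, personal anecdotes "
    "offered as evidence are removed unless they cite published research."
)
examples = [
    Example("My uncle drank celery juice and his cold vanished, so it works.", "removed"),
    Example("Here is the peer-reviewed trial I'm basing this on, with its DOI.", "allowed"),
]
new_post = "Trust me, my neighbor says magnets cure headaches."

zero_shot = build_prompt(rules, [], new_post)
few_shot = build_prompt(rules, examples, new_post)
print(few_shot)
```

Because the community's norms live in plain text, changing a rule means editing a sentence or swapping an example, not retraining a model. That's what makes this approach accessible to non-ML experts, though the model's answers still deserve spot checks.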

Tailoring AI: Addressing Community-Specific Needs

Let's look at the moderation example more closely. A generic AI model designed to filter content can easily miss the mark in communities with very different norms and cultures. Imagine a science community where seemingly mundane anecdotes are rejected unless they're backed by scientific research, while another community tolerates content that's considered "Not Safe For Work." The traditional, rigid model of AI development doesn't allow for this kind of fine-grained customization.

Foundation models could change that. Using the few-shot or zero-shot capabilities of models like GPT-3, a community could build its own classifier, defining what counts as "problematic content" according to its own guidelines, culture, and values, simply by writing natural language task descriptions. No more square pegs in round holes! Beyond classification, the in-context learning capabilities of foundation models could let AI-powered applications optimize their interfaces for each individual user. That opens new ways to tackle long-standing challenges in human-computer interaction, such as striking the right balance between direct manipulation by the user and automation in mixed-autonomy settings. It's a future where AI adapts to us, rather than the other way around.
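
The balance between direct manipulation and automation can be illustrated with a deliberately simple, rule-based stand-in. The hypothetical Python sketch below keeps one policy per user: the assistant acts on its own only above a confidence threshold, offers a suggestion in a middle band, and otherwise stays out of the way, while each user's undo and accept actions nudge their personal threshold. It does not use in-context learning; "confidence" stands for whatever certainty estimate the application has, and every number is an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass
class AutonomyPolicy:
    """Per-user thresholds deciding when an assistant acts, suggests, or stays quiet.

    All numbers are illustrative assumptions, not recommended values.
    """
    act_threshold: float = 0.9      # at or above: apply the change automatically
    suggest_threshold: float = 0.6  # at or above: offer it for the user to accept
    step: float = 0.02              # how far one piece of feedback moves the act threshold

    def decide(self, confidence: float) -> str:
        if confidence >= self.act_threshold:
            return "act"
        if confidence >= self.suggest_threshold:
            return "suggest"
        return "stay_quiet"

    def record_feedback(self, action: str, user_kept_it: bool) -> None:
        """Nudge this user's act threshold based on how they responded."""
        if action == "act" and not user_kept_it:
            # The user undid an automatic change: be more conservative for them.
            self.act_threshold = min(0.99, self.act_threshold + self.step)
        elif action == "suggest" and user_kept_it:
            # The user accepted a suggestion: allow slightly more automation,
            # but keep acting strictly harder than suggesting.
            self.act_threshold = max(self.suggest_threshold + self.step,
                                     self.act_threshold - self.step)


policy = AutonomyPolicy()  # one instance per user
print(policy.decide(0.93))  # "act" at the default 0.9 threshold
for _ in range(2):  # this user undoes two automatic changes in a row
    policy.record_feedback("act", user_kept_it=False)
print(policy.decide(0.93))  # threshold is now about 0.94, so "suggest"
```

A design choice worth noting: feedback only moves the threshold for acting automatically, and never lets it fall into the suggestion band, so "act" always requires more confidence than "suggest" no matter how a given user responds.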

The Double-Edged Sword: Opportunities and Responsibilities

While this newfound power is undeniably exciting, it's also a double-edged sword. The ability for communities to create highly customized AI classifiers, for instance, could be incredibly beneficial for content moderation, ensuring online spaces truly reflect their members' values. But what if this power is misused? What if such a capability is exploited to silence certain voices or to promote narrow, exclusionary viewpoints within a community? These are not trivial concerns; they are critical ethical considerations that we must actively address as this technology evolves.

Of course, realizing this potential for blurring the lines between users and developers won't be without its challenges. We'll need to work diligently to mitigate existing biases within foundation models, ensuring they don't inadvertently perpetuate harmful stereotypes or discriminatory practices. Furthermore, making these models truly robust and manageable for non-ML experts will be a significant undertaking. Understanding the full capacities and mechanisms of foundation models can be difficult even for seasoned machine learning professionals, so imagine the complexity for someone with limited experience! This could lead to unexpected pitfalls in the development cycle. Future research must explore how foundation models can be integrated into interactive machine learning frameworks, focusing on how to empower even those without extensive ML knowledge to leverage these models effectively and responsibly. Nonetheless, the opportunity for end-users to actively participate in developing AI-infused applications is a truly exciting prospect, one that could usher in a completely new paradigm for human-AI interaction.

Navigating the Future: Challenges and Opportunities Ahead

As we stand on the threshold of this AI-driven revolution, it's clear that while the opportunities are vast and exhilarating, there are also some significant hurdles we need to overcome. It's not all sunshine and rainbows; there are real challenges we must address to truly harness the power of foundation models responsibly. We've talked about the incredible potential, but now let's ground ourselves in the reality of what it will take to get there.

Building Trust and Robustness: Essential for Widespread Adoption

Imagine an AI that consistently surprises you with its unpredictable behavior. Would you trust it with important tasks? Probably not, right? This is one of the key challenges with foundation models: their immense generalizability and "high ceiling" for capabilities can also make them incredibly complex and, at times, difficult to wrangle. While they can perform a wide array of tasks, ensuring they consistently perform the intended task, and do so reliably, is a monumental effort. It’s like owning a super-powered car that can go anywhere, but you're not entirely sure if it will always take you where you want to go. This unpredictability can erode user trust and hinder widespread adoption.

To build reliable, trustworthy AI-infused applications, future research needs to focus on making foundation models behave more predictably and robustly. That could involve innovative fine-tuning techniques or, for natural language interactions, prompt-engineering strategies that guide the model more effectively. We also need methods for "calibrating" these models, so that the confidence a model attaches to an answer reflects how likely that answer is to be correct. Ultimately, the goal is to make these powerful tools more manageable and transparent, even for people without deep machine learning expertise. Only then can we unlock their full potential and integrate them into our daily lives.
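
For prompted classifiers, one published calibration technique is contextual calibration (Zhao et al., 2021). The idea is to run the prompt once with a content-free placeholder, such as "N/A", in place of a real input; any preference the model shows there reveals a built-in bias toward certain labels, which can then be divided out of real predictions. The Python sketch below is a simplified version of that idea with hypothetical probabilities; obtaining label probabilities from an actual model is assumed rather than shown.

```python
def calibrate(label_probs, content_free_probs):
    """Divide out a prompt's built-in label bias, then renormalize to sum to 1."""
    if label_probs.keys() != content_free_probs.keys():
        raise ValueError("both dicts must cover the same labels")
    adjusted = {label: p / content_free_probs[label] for label, p in label_probs.items()}
    total = sum(adjusted.values())
    return {label: v / total for label, v in adjusted.items()}


# Hypothetical numbers: with a content-free "N/A" post, the prompt already leans
# 70/30 toward "allowed", so a 60/40 reading on a real post is weaker than it looks.
content_free = {"allowed": 0.7, "removed": 0.3}
raw = {"allowed": 0.6, "removed": 0.4}
calibrated = calibrate(raw, content_free)

print("raw prediction:", max(raw, key=raw.get))
print("calibrated prediction:", max(calibrated, key=calibrated.get))
print({label: round(p, 2) for label, p in calibrated.items()})
```

Here the raw reading favors `allowed`, but once the prompt's built-in 70/30 lean is removed, the calibrated scores favor `removed` (roughly 0.61 versus 0.39). The same prompt, in other words, can quietly tilt every decision until that bias is measured.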

The Human Element: Ensuring AI Aligns with Our Values

Beyond the technical intricacies, there's a deeply human dimension to this evolution. As AI becomes more deeply embedded in our lives, we must ensure that these powerful models align with our values and serve humanity's best interests. This isn't just a nice-to-have; it's a fundamental necessity. Foundation models, by their very nature, are trained on vast amounts of existing data, which, unfortunately, can sometimes reflect societal biases and problematic viewpoints. When generative capabilities are at play, these biases can be amplified, leading to outputs that might surprise, disappoint, or even harm users and communities.

This places a significant burden on the groups and individuals utilizing foundation models. We have a responsibility to continuously monitor their behavior and, to the extent possible, adapt them to act in appropriate and ethical ways. This means actively engaging in processes like participatory design and value-sensitive design, ensuring that diverse voices and values are integrated throughout the development and deployment lifecycle. We need to ask ourselves tough questions: How will these models impact our communication, our work, our language, and our culture in the long term? How can we ensure that the use of foundation models leads us to a desired future, rather than simply repeating or amplifying the less desirable aspects of our past? The answers to these questions will shape the very nature of our interaction with AI for generations to come.
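
What might "continuously monitor" look like in practice? For the community-moderation example, one common check is whether a classifier wrongly flags rule-abiding posts from some groups of members more often than others, which is also one way the silencing risk raised earlier could show up. The Python sketch below assumes a hand-reviewed sample of posts tagged by group; the groups, the data, and the 0.1 alert gap are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# (group, model_flagged, violates_rules) for one hand-reviewed post.
Record = Tuple[str, bool, bool]


def false_positive_rates(records: Iterable[Record]) -> Dict[str, float]:
    """Per group: share of rule-abiding posts that the classifier still flagged."""
    flagged = defaultdict(int)
    acceptable = defaultdict(int)
    for group, model_flagged, violates in records:
        if not violates:  # only rule-abiding posts can be false positives
            acceptable[group] += 1
            if model_flagged:
                flagged[group] += 1
    return {group: flagged[group] / acceptable[group] for group in acceptable}


def needs_attention(rates: Dict[str, float], max_gap: float = 0.1) -> bool:
    """Flag the classifier for review if group false-positive rates differ by more than max_gap."""
    return max(rates.values()) - min(rates.values()) > max_gap


# Hypothetical review sample: 10 acceptable posts per group, plus some real violations.
records = (
    [("group_a", True, False)] * 1 + [("group_a", False, False)] * 9
    + [("group_b", True, False)] * 4 + [("group_b", False, False)] * 6
    + [("group_a", True, True)] * 5 + [("group_b", True, True)] * 5
)
rates = false_positive_rates(records)
print(rates, needs_attention(rates))
```

In this made-up sample, group_b's acceptable posts are flagged four times as often as group_a's (40% versus 10%), so the check fires. A real audit would need larger samples and careful thought about how groups are defined, but even a simple recurring check like this turns a vague worry into something a community can act on.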

Conclusion: A New Era of Human-AI Collaboration

So, there you have it. We've journeyed through the incredible landscape of foundation models and their profound impact on how we interact with artificial intelligence. From empowering developers to transforming user experiences and even blurring the lines between creators and consumers, these models are undeniably ushering in a new era. We're moving beyond mere augmentation towards a future where AI becomes a more intuitive, versatile, and deeply integrated part of our lives, allowing for unprecedented levels of creativity and customization.

While the path ahead comes with its share of challenges – ensuring robustness, mitigating biases, and thoughtfully considering societal implications – the opportunities are simply too compelling to ignore. This isn't just about technological advancement; it's about reshaping our relationship with technology, fostering a new kind of collaboration between humans and machines. The future of interaction with AI is not just bright; it's dynamic, personal, and incredibly exciting. It’s a future where AI truly works with us, for us, and sometimes, even as us.

Frequently Asked Questions (FAQs)

Q1: What exactly is a "foundation model" in simple terms?

A1: Think of a foundation model as a highly versatile and powerful AI generalist. Instead of being trained for one specific task, it's trained on a massive amount of diverse data, allowing it to understand and generate content across many different domains (like text, images, or even code). This broad understanding makes it a "foundation" upon which many different AI applications can be quickly and easily built.

Q2: How do foundation models help developers create AI applications faster?

A2: Traditionally, building AI applications required extensive data collection and training from scratch, which is time-consuming and resource-intensive. Foundation models significantly lower this "difficulty threshold" by providing a pre-trained base. Developers can then adapt these models to specific tasks, either by fine-tuning them with much less data and effort or sometimes simply by prompting them, which drastically speeds up prototyping and development. It's like having a pre-built engine for your car, so you just need to focus on designing the rest of the vehicle!

Q3: Will foundation models make AI interactions more natural for everyday users?

A3: Absolutely! Foundation models, especially language-based ones, can understand and respond to natural language commands, making AI feel much more intuitive and conversational. They can also generate diverse and high-quality outputs across different modalities (like creating images from text), leading to richer and more engaging user experiences that feel less like interacting with a machine and more like collaborating with an intelligent assistant.

Q4: How might foundation models blur the line between AI developers and users?

A4: Because foundation models can often be adapted with natural language prompts or small amounts of data, they could empower non-technical users to customize AI behaviors to their specific needs. Imagine an online community designing its own content moderation AI based on its unique rules, without needing a team of machine learning experts. Users could then become active participants in shaping the AI they use, rather than just passively consuming pre-built applications.

Q5: What are some of the main concerns or challenges with foundation models?

A5: While incredibly powerful, foundation models present challenges. Their vast generative capabilities can sometimes lead to unpredictable or biased outputs, especially when the underlying training data contains biases. Ensuring their robustness and transparency, and aligning their behavior with human values, are crucial ongoing efforts. There are also important questions around authorship of AI-generated content and the long-term societal impacts on work and culture.

Citation: Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., ... & Liang, P. (2022). On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258. Available at: https://arxiv.org/abs/2108.07258